I’ve talked before about using high-pass filtering to roll off undesirable low frequencies in audio. This can be done in software via plugins or in hardware (e.g., on a mixing console or microphone preamp). I suggest reading our primer on high-pass filtering before proceeding with today’s topic, which is phase shifting.

What happens to audio when processed through a high-pass filter?

So what exactly is phase shifting? Well, let’s first understand what happens to an audio signal as it travels through a high-pass filter. In the example below, you can see I’m rolling off frequencies below 75 Hz at 12dB per octave. High-pass filters typically allow the user to specify just how much is rolled off below the cutoff frequency. The higher the decibel value, the steeper the slope and the greater the roll-off. Look at the screenshot and you’ll see the slope.

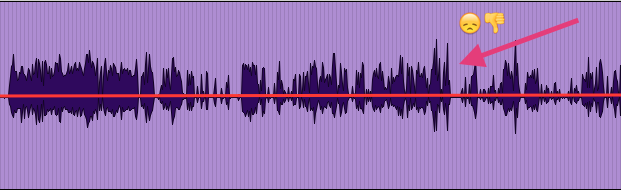
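To put rough numbers on that slope, here is a minimal sketch. It assumes the filter behaves like an ideal second-order Butterworth high-pass, which is one common way to get 12dB per octave; the filter in the screenshot may use a different design. The function name `butterworth_highpass_gain_db` is my own for illustration.

```python
import math

def butterworth_highpass_gain_db(freq_hz, cutoff_hz, order=2):
    """Gain in dB of an ideal (analog) Butterworth high-pass filter.

    Assumption: a 2nd-order Butterworth response stands in for the
    12 dB per octave filter; real plugins and preamps may differ.
    """
    ratio = (freq_hz / cutoff_hz) ** order
    magnitude = ratio / math.sqrt(1.0 + ratio ** 2)
    return 20.0 * math.log10(magnitude)

cutoff = 75.0  # Hz, the cutoff used in the example
for freq in (75.0, 37.5, 18.75):  # the cutoff, then one and two octaves below it
    gain = butterworth_highpass_gain_db(freq, cutoff)
    print(f"{freq:6.2f} Hz: {gain:6.1f} dB")
```

Under this assumption the gain is about 3 dB down at the cutoff itself and then falls by close to 12 dB for every octave below it.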

After applying the filter, you can see that on one axis, the energy of the waveform is displaced. The human voice is often said to be asymmetrical in nature; however, high-pass filters can further increase that asymmetry. This does not necessarily indicate a problem with the sound quality, but it does pose challenges for podcast audio producers because it decreases headroom.
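Why would phase alone cost headroom? The toy sketch below builds two waveforms from the same eight harmonics at the same amplitudes and changes only their phase. It is not a model of a voice or of any particular filter, and the function name `synthesize` and the choice of eight harmonics are just assumptions for illustration.

```python
import math

def synthesize(num_harmonics, phase_offset, num_samples=4096):
    """One cycle of a harmonic series with amplitudes 1/k and a shared phase offset."""
    cycle = []
    for n in range(num_samples):
        theta = 2.0 * math.pi * n / num_samples
        sample = sum(math.cos(k * theta + phase_offset) / k
                     for k in range(1, num_harmonics + 1))
        cycle.append(sample)
    return cycle

aligned = synthesize(8, 0.0)             # every harmonic peaks at the same instant
rotated = synthesize(8, -math.pi / 2.0)  # same amplitudes, each phase rotated by 90 degrees

for name, wave in (("aligned", aligned), ("rotated", rotated)):
    print(f"{name}: max {max(wave):+.3f}, min {min(wave):+.3f}")
```

The aligned version is lopsided, with a tall positive peak and a much shallower negative one, while the rotated version is symmetrical and peaks lower. Both contain exactly the same frequency content, which is why shifting phase can recover headroom without changing the tonal balance.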

What is headroom?

Headroom is essentially the “wiggle room” you have as an engineer when processing audio before the source exceeds zero decibels relative to full scale (dBFS). dBFS is a unit of measurement for amplitude levels in digital systems, which have a defined maximum peak level. In the digital realm, 0dBFS is the absolute peak ceiling; a signal pushed beyond it clips, which you hear as distortion.

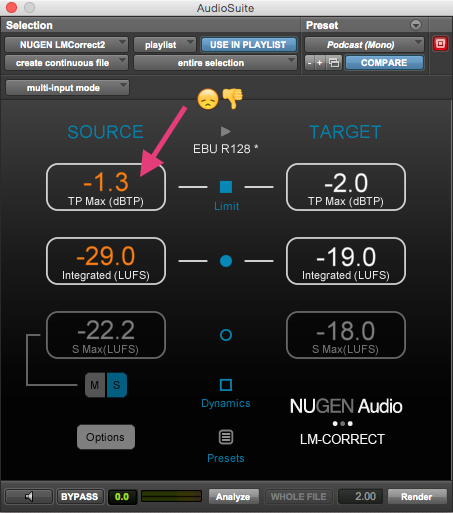
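If you want to see headroom as a number, here is a small sketch. It assumes floating-point samples where full scale is 1.0, and it measures the sample peak rather than the true peak reported by a loudness meter. The function names and the sample values are made up for illustration.

```python
import math

def peak_dbfs(samples):
    """Sample peak in dBFS for floating-point samples where full scale is 1.0."""
    peak = max(abs(s) for s in samples)
    if peak == 0.0:
        return float("-inf")  # digital silence has no measurable peak
    return 20.0 * math.log10(peak)

def headroom_db(samples):
    """Distance in dB between the sample peak and the 0 dBFS ceiling."""
    return 0.0 - peak_dbfs(samples)

clip = [0.10, -0.50, 0.25, -0.05]  # hypothetical samples; largest magnitude is 0.5
print(f"peak: {peak_dbfs(clip):.2f} dBFS, headroom: {headroom_db(clip):.2f} dB")
# prints "peak: -6.02 dBFS, headroom: 6.02 dB"
```

Note that the negative sample sets the peak here. A lopsided waveform uses up headroom on one side while leaving the other side unused.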

Now that you understand the basic terminology, you can see why displaced energy in a waveform decreases headroom, creating processing challenges as you prepare for loudness normalization. Loudness normalization is an essential step after optimizing your audio (applying compression), as it targets optimal perceived average loudness. This creates consistency for the listener so they don’t need to crank the volume in louder environments, and it also aids intelligibility of the spoken word. If you check the maximum true peak of your audio before the phase shift, you’ll notice the loss of headroom. In the example below, the source checks in at -1.3dB. This is not the worst example, but you’ll get a better idea later on as we shift the phase of the source.

To better understand loudness normalization, please read “Loudness Compliance And Podcasts” and “Your Podcast Might Be Too Quiet Or Too Loud”.

Optimizing headroom through phase shifting

To restore headroom in your audio source after high-pass filtering, you can use third-party tools. This demonstration uses Waves’ InPhase plugin; other tools, such as the iZotope RX suite, contain an adaptive phase correction feature that is even easier to use.

Step 1:

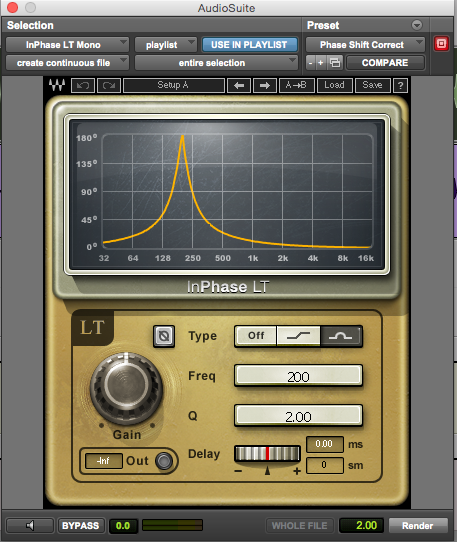

My Digital Audio Workstation (DAW) of choice is Pro Tools. On a mono audio source, I select the clip and instantiate the LT (non live) version of Waves InPhase. I have created a default preset to handle phase shift correction, which you can download from here. Note that this preset is meant as a starting point, so values such as input gain and delay may be tweaked depending on the source you’re working with.

Here’s how I have the plugin configured:

As illustrated in the screenshot, you can set the all-pass frequency to 200 Hz and the Q bandwidth to 2.0. “Type” sets the phase shift filter type, and toggles between Off, Shelf (using the 1st order allpass filter) and Bell (using the 2nd order allpass filter). In the example we use the Bell filter type. Allpass filters are normally used to correct phasing issues between tracks. Here’s a quick rundown of what these settings are doing, as documented in the plugin manual:

An allpass filter is a signal processing filter that affects only the phase, not the amplitude of the frequency-response of a signal. This example demonstrates using a 2nd order filter, which is defined by the frequency at which the phase shift is 180° and by the rate of phase change. The rate of the phase change is dictated by the Q factor. Q sets the width of the 2nd order (Bell) allpass filter: A narrower Q results in a faster phase transition toward the selected frequency, leaving a larger portion of the frequency intact.
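To see those two properties in numbers, here is a minimal sketch of a 2nd order allpass using the widely published Audio EQ Cookbook biquad formulas. I’m not claiming this is how InPhase is implemented; the 48 kHz sample rate and the function names are assumptions for illustration, while the 200 Hz and Q of 2.0 values come from the preset above.

```python
import cmath
import math

def allpass_coefficients(center_hz, q, sample_rate):
    """2nd order allpass biquad coefficients (Audio EQ Cookbook form)."""
    w0 = 2.0 * math.pi * center_hz / sample_rate
    alpha = math.sin(w0) / (2.0 * q)
    b = (1.0 - alpha, -2.0 * math.cos(w0), 1.0 + alpha)  # numerator
    a = (1.0 + alpha, -2.0 * math.cos(w0), 1.0 - alpha)  # denominator
    return b, a

def frequency_response(b, a, freq_hz, sample_rate):
    """Complex response of the biquad at a single frequency."""
    z_inv = cmath.exp(-2j * math.pi * freq_hz / sample_rate)
    numerator = b[0] + b[1] * z_inv + b[2] * z_inv ** 2
    denominator = a[0] + a[1] * z_inv + a[2] * z_inv ** 2
    return numerator / denominator

SAMPLE_RATE = 48000.0  # assumed session sample rate
b, a = allpass_coefficients(200.0, 2.0, SAMPLE_RATE)
for freq in (50.0, 100.0, 200.0, 400.0, 800.0):
    h = frequency_response(b, a, freq, SAMPLE_RATE)
    gain_db = 20.0 * math.log10(abs(h))
    phase_deg = math.degrees(cmath.phase(h))
    print(f"{freq:6.1f} Hz: gain {gain_db:+.3f} dB, phase {phase_deg:+.1f} deg")
```

The gain stays at 0 dB for every frequency while the phase swings through 180° at the 200 Hz center. Raising Q squeezes that phase transition into a narrower band around the center frequency.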

The InPhase settings came recommended by Paul Figgiani, but they’re meant as a starting point. The significance of the settings is fairly complex, and I will continue to explore it.

Step 2:

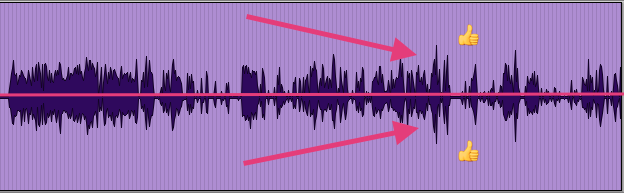

Clicking “Render” will process the selected audio and should shift the phase slightly so that the energy of the waveform isn’t lopsided.

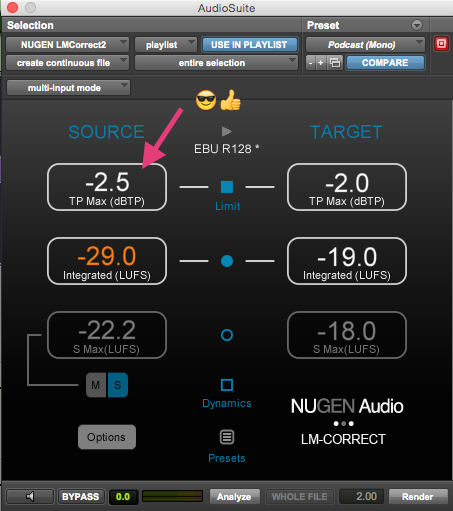

Now that the phase has been shifted slightly in the correct direction, we have an optimal source to work with for loudness normalization. When the process was started, our source checked in at -1.3dB. Analyzing the corrected version below, you can see we’ve restored quite a bit of headroom.

Hot damn, look at that. Our source is now -2.5dB, a full 1.2dB of headroom recouped!

Step 3:

With the phase shifted in the correct direction, loudness normalization can now be done on the source audio. Headroom has been regained, which means that when you process the audio with a true peak limiter, excessive limiting should not occur (this is a good thing, as excessive limiting can squash the dynamics of the human voice).
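Here is a back-of-the-envelope sketch of what that regained headroom means for the limiter. The two peak values are the ones measured above. The 4 dB of normalization gain and the -1.0 dB ceiling are hypothetical numbers I picked for illustration, and the sketch assumes the phase shift leaves the measured loudness unchanged, so the same gain applies in both cases.

```python
def limiting_required_db(true_peak_db, gain_db, ceiling_db):
    """Gain reduction a limiter must apply so the boosted peak stays at the ceiling."""
    return max(0.0, true_peak_db + gain_db - ceiling_db)

gain = 4.0      # hypothetical boost needed to reach the loudness target
ceiling = -1.0  # hypothetical true peak ceiling

before = limiting_required_db(-1.3, gain, ceiling)  # peak before the phase shift
after = limiting_required_db(-2.5, gain, ceiling)   # peak after the phase shift
print(f"before: {before:.1f} dB, after: {after:.1f} dB, saved: {before - after:.1f} dB")
# prints "before: 3.7 dB, after: 2.5 dB, saved: 1.2 dB"
```

With these made-up targets, the limiter has 1.2 dB less work to do on the loudest peak, which is exactly the headroom recouped by the phase shift.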

Conclusion

You should now have a good understanding of how most high-pass filters affect the human voice. Note that the source audio in these examples was high-pass filtered in hardware, not software. This matters because linear phase filtering helps avoid the displaced waveform energy discussed here, provided the source was not already high-pass filtered in hardware. Linear phase filters are a topic for another day, but effectively they delay every frequency by the same amount, so the phase relationships in the original audio are preserved during processing.

If you have any questions about how the aforementioned workflow is accomplished, don’t hesitate to reach out to us on Twitter and Facebook.

Acknowledgements

Many thanks to audio engineer Paul Figgiani, whose experience and breadth of knowledge rivals my own. He continues to be an inspiration and aided in the accuracy of this piece. Make sure to check out his blog: ProduceNewMedia.com.

The post Podcast Editing Tips: Part 3 – Phase Shifting to Recoup Loss of Headroom appeared first on FeedPress – RSS analytics and podcast hosting, done right….
